Are you curious about how computers can “learn” and make accurate predictions just like humans? The secret lies in Neural Networks, a model inspired by our own brains – the seat of intelligence and complex information processing.

Join Click Digital as we explore the journey from the early ideas to the explosion of Deep Learning, all built upon the robust foundation of Neural Networks.


The Origin of Artificial Neural Networks

How do we create an algorithm as intelligent as a human? Can we learn from how the human brain works to build intelligent machines? The answer lies in Neural Networks – artificial neural networks.

The human brain, a marvel of nature, is made up of billions of interconnected nerve cells called neurons. Each neuron has three main components:

- Dendrite: Receives electrical signals from other neurons.

- Cell body (soma), which contains the nucleus: Integrates the incoming signals.

- Axon: Transmits signals to other neurons.

The dendrite receives input, the cell body processes it, and the axon transmits the output. This may sound simple, but billions of interconnected neurons form a complex system that enables us to think, learn, and perform complex tasks.

Inspired by our understanding of the brain, researchers created the artificial neuron unit.

The artificial neuron unit is a simplified version of a biological neuron. It receives input as one or more real numbers, processes them through a function, and outputs another real number.

This processing function usually combines a linear function with an activation function. The linear function calculates the weighted sum of the inputs plus a bias. The activation function then transforms that sum, typically nonlinearly. Some activation functions, such as sigmoid, also limit the output to a specific range, such as between 0 and 1.

Combining multiple artificial neuron units creates an artificial neural network (ANN). Once trained, an ANN can handle complex tasks and make accurate predictions from input data.

However, it’s important to note:

- Human knowledge about the brain is still limited. We’re still uncovering the secrets of the brain.

- Blindly imitating the biological characteristics of the human brain may not create “intelligence.” Instead, we need to focus on sound engineering and design principles.

- Despite their simplicity, artificial neuron models can create powerful algorithms that are efficiently applied in various fields.

Comparison of Neural Networks and the Human Brain

| Feature | Human Brain | Neural Network |
| --- | --- | --- |
| Structure | Billions of interconnected neurons forming a complex network. | Artificial neuron units arranged in layers and connected according to a network architecture. |
| Information processing | Electrical signals are transmitted between neurons and integrated in each neuron's cell body. | Real numbers are passed between neuron units and processed by linear functions and activation functions. |
| Learning | Learns from experience and interaction with the environment. | Learns by training on large datasets, adjusting the weights and biases of neuron units. |
| Capability | Thinking, learning, emotions, creativity… | Information processing, classification, prediction, recognition… |
| Advantage | Processes complex information and adapts to changing environments. | Learns from large datasets; highly efficient for specific tasks. |
| Disadvantage | Limited computational speed, susceptible to emotions, prone to errors. | Requires large datasets for training; prone to overfitting. |

Deep Learning – The Rise of Neural Networks

Neural Networks have been around since the 1960s, but they only truly took off in the mid-2000s under a new name: Deep Learning.

Why now?

- Big Data: The explosion in data due to the rise of the internet, mobile phones, and digitalization has created opportunities for Deep Learning. Neural network models, especially deep networks, can efficiently exploit this massive amount of data to learn hidden patterns within the data.

- Computational Power: The development of powerful processors, especially GPUs, has provided the computational capacity needed to train complex Deep Learning models. Training deep networks requires immense computation, and GPUs have become essential tools for building and training these models.

It’s clear that Big Data and computational power are the two main drivers behind the explosion of Deep Learning.

How a Neural Network “Infers”

To better understand Neural Networks, let’s delve into how a Neural Network “infers” or makes predictions.

Example:

Imagine you want to predict a student’s chance of getting into a specialized school based on their scores in three subjects: Math, Literature, and a specialized subject.

Prediction Formula:

Probability = σ(w1 * x1 + w2 * x2 + w3 * x3 + b)

Where:

- x1, x2, x3: Scores in the three subjects (Input).

- w1, w2, w3: Weights for each subject.

- b: Bias.

- σ(x) = 1 / (1 + e^-x): Sigmoid function.

Let’s break down the steps:

- Calculate the weighted sum: Multiply each score by its corresponding weight and add the products.

- Add the bias: Add the bias b to the result above.

- Apply the Sigmoid function: The Sigmoid function converts the result into a real number between 0 and 1, representing the probability of admission.
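Plugging in numbers makes the three steps concrete. All values below are hypothetical. The scores are rescaled to the range 0 to 1 (x1 = 0.8, x2 = 0.6, x3 = 0.9), the weights are w1 = 1.5, w2 = 1.0, w3 = 2.0, and the bias is b = -2.5. A real model would learn the weights and bias from data.

```latex
\begin{aligned}
s &= w_1 x_1 + w_2 x_2 + w_3 x_3 = 1.5(0.8) + 1.0(0.6) + 2.0(0.9) = 1.2 + 0.6 + 1.8 = 3.6 \\
s + b &= 3.6 + (-2.5) = 1.1 \\
\text{Probability} &= \sigma(1.1) = \frac{1}{1 + e^{-1.1}} \approx \frac{1}{1.3329} \approx 0.750
\end{aligned}
```

With these made-up parameters, the model would estimate roughly a 75% chance of admission.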

Some terminology:

- Weight: The coefficient for each input, determining the impact of that input on the output.

- Bias: A parameter that adjusts the output, allowing the model to “shift” the output up or down.

- Activation: The output of a neuron, usually calculated using the activation function.

- Activation function: A mathematical function, usually nonlinear, that converts the neuron's weighted sum (plus bias) into its output.

The model above is, in effect, a single neuron in a Neural Network.

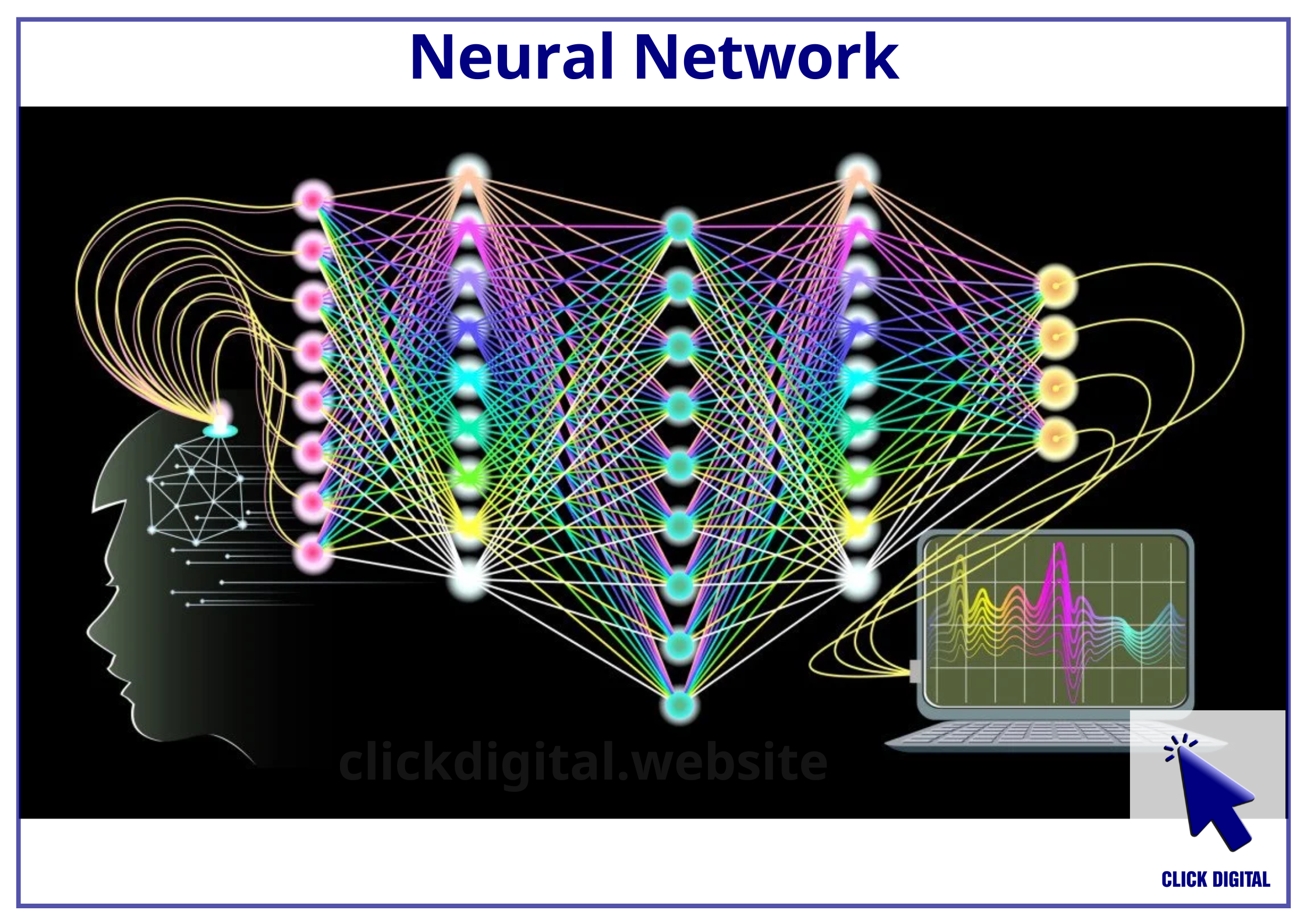

The Architecture of a Neural Network

Neural Networks are made up of many interconnected neurons. These neurons are arranged in layers, with each layer receiving input from the previous layer and outputting results to the next layer.

Some concepts:

- Layer: A set of neurons that all take the previous layer’s outputs as input and whose outputs together form the input to the next layer.

- Input layer: The first layer, which receives the model’s input.

- Output layer: The last layer, which outputs the model’s prediction.

- Hidden layers: Layers between the input and output layers.

- Dense layer (fully-connected layer): A type of layer where each neuron is connected to all neurons in the previous layer.

A Neural Network must have at least one input layer and one output layer, and it can have zero, one, or multiple hidden layers. The number of layers in a Neural Network determines its “depth,” leading to the term Deep Learning.
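A dense layer can be written compactly as a matrix-vector product, which is how most frameworks compute it. The sketch below assumes NumPy and uses ReLU in the hidden layer. The layer sizes (4 inputs, 3 hidden units, 2 outputs) are arbitrary and chosen only for illustration. `dense`, `relu`, and `identity` are hypothetical helper names.

```python
import numpy as np

def dense(x, W, b, activation):
    """Fully-connected layer: every output neuron sees every input value.

    W has shape (n_out, n_in): row j holds neuron j's weights,
    one weight per neuron in the previous layer.
    """
    return activation(W @ x + b)

def relu(z):
    return np.maximum(0.0, z)

def identity(z):
    return z

rng = np.random.default_rng(0)
x = rng.random(4)                            # input layer: 4 values

# Hidden layer: 3 neurons, each connected to all 4 inputs.
W1, b1 = rng.normal(size=(3, 4)), np.zeros(3)
# Output layer: 2 neurons, each connected to all 3 hidden neurons.
W2, b2 = rng.normal(size=(2, 3)), np.zeros(2)

hidden = dense(x, W1, b1, relu)              # each layer feeds the next
output = dense(hidden, W2, b2, identity)
print(hidden.shape, output.shape)  # (3,) (2,)
```

Adding more hidden layers just means chaining more `dense` calls, which is what makes a network "deeper."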

Comparison of layer types in Neural Networks:

| Layer Type | Description | Application |
| --- | --- | --- |
| Input layer | The first layer, which receives the model’s input. | Receives input data, for example: images, text, numerical data… |
| Output layer | The last layer, which outputs the model’s prediction. | Outputs the predicted result, for example: classification, value prediction, text generation… |
| Hidden layers | Layers between the input and output layers. | Extract hidden features from the data, helping the model make accurate predictions. |
| Dense layer (fully-connected layer) | A type of layer where each neuron is connected to all neurons in the previous layer. | Common in many applications; can learn complex relationships between input and output. |
| Convolutional layer | A type of layer that uses filters to extract local features within images. | Suited to image processing tasks such as object recognition and image classification. |
| Recurrent layer | A type of layer that can store information from previous calculations, helping it process sequential data. | Suited to natural language processing tasks such as machine translation and text generation. |

Comparison of common Neural Network types

| Network Type | Description | Advantages | Disadvantages | Application |
| --- | --- | --- | --- | --- |
| Multilayer Perceptron (MLP) | An artificial neural network with one or more hidden layers, in which neurons in adjacent layers are fully connected. | Common, easy to understand and implement. | Can overfit; does not exploit spatial or sequential structure in data such as images or text. | Classification, prediction, regression. |
| Convolutional Neural Network (CNN) | A neural network specialized for image processing, using convolutional layers to extract local features within images. | Highly efficient for image processing; learns spatial features of images. | Requires large datasets for training; the network architecture can be difficult to tune. | Object recognition, image classification, image segmentation. |
| Recurrent Neural Network (RNN) | A neural network specialized for sequential data, using recurrent layers to store information from previous calculations. | Effective for natural language processing; learns relationships between elements in a sequence. | Difficult to train; prone to vanishing or exploding gradients. | Machine translation, text generation, sentiment analysis. |
| Long Short-Term Memory (LSTM) | A variant of RNN that uses gates to control the flow of information, designed to mitigate the vanishing-gradient problem. | Mitigates vanishing gradients; better long-term memory than a plain RNN. | More complex and computationally costly than a plain RNN; processes sequences step by step, which limits parallelism. | Machine translation, text generation, sentiment analysis. |
| Generative Adversarial Network (GAN) | A neural network consisting of two parts, a generator and a discriminator, that compete so the generator learns to produce fake data resembling real data. | Can generate very realistic fake data; applicable in many areas. | Difficult to train; prone to mode collapse and instability. | Image generation, video generation, music generation. |
| Autoencoder | A neural network trained to reconstruct its input data, which can be used to extract hidden features within the data. | Learns hidden features of data; can support other tasks such as dimensionality reduction and classification. | Performance depends on network architecture and training data. | Dimensionality reduction, classification, anomaly detection. |
| Transformer | A neural network based on the attention mechanism, allowing the network to learn relationships between elements in a sequence of data. | Highly efficient for natural language processing; learns contextual relationships. | More complex than traditional neural networks; standard self-attention cost grows quadratically with sequence length. | Machine translation, text generation, text classification. |

This table provides an overview of common Neural Network types, giving you a better understanding of the strengths, weaknesses, and applications of each type.

Choosing the right type of network depends on the characteristics of the data, the objectives of the task, and the available resources.

Comparison of common activation functions

| Activation Function | Formula | Advantages | Disadvantages |
| --- | --- | --- | --- |
| Sigmoid | σ(x) = 1 / (1 + e^-x) | Output is in the range (0, 1), easy to interpret as a probability. | Gradients vanish when the input is very large or very small (saturation). |
| ReLU | f(x) = max(0, x) | Avoids vanishing gradients for positive inputs; cheaper to compute than sigmoid. | "Dying ReLU": a unit whose input stays negative always outputs 0 and receives zero gradient. |
| Tanh | tanh(x) = (e^x - e^-x) / (e^x + e^-x) | Output is in the range (-1, 1) and zero-centered, which often helps training compared with sigmoid. | Still saturates, so gradients vanish for inputs of large magnitude. |
| Softmax | softmax(x)_i = exp(x_i) / Σ_j exp(x_j) | Converts the input vector into a probability distribution, suitable for multi-class classification tasks. | Naive computation can overflow for large inputs (usually avoided by subtracting max(x)); assumes the classes are mutually exclusive. |
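The four functions in the table can be written directly in NumPy. This is an illustrative sketch. The softmax uses the common max-subtraction trick, which leaves the result unchanged but keeps `exp` from overflowing.

```python
import numpy as np

def sigmoid(x):
    # 1 / (1 + e^-x); output in (0, 1)
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    # max(0, x), applied element-wise
    return np.maximum(0.0, x)

def tanh(x):
    # (e^x - e^-x) / (e^x + e^-x); output in (-1, 1)
    return np.tanh(x)

def softmax(x):
    # Subtracting max(x) cancels out in the ratio but prevents overflow in exp().
    shifted = np.exp(x - np.max(x))
    return shifted / shifted.sum()

z = np.array([-2.0, 0.0, 3.0])
print(sigmoid(z))
print(relu(z))      # [0. 0. 3.]
print(tanh(z))
print(softmax(z), softmax(z).sum())  # probabilities summing to 1 (up to rounding)
```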

Summary of common terminology in Neural Network

| Terminology | Definition | Example |
| --- | --- | --- |
| Neuron | The basic unit of a Neural Network, which receives input, processes information, and outputs results. | A neuron in the hidden layer receives input from neurons in the input layer, processes it, and passes its output to neurons in the next layer. |
| Weight | The coefficient assigned to each input, determining the influence of that input on the neuron’s output. | The weight of a neuron in the first hidden layer could reflect the importance of a particular pixel in the input image for predicting the outcome. |
| Bias | A parameter that adjusts the output of the neuron, allowing the model to “shift” the output up or down. | The bias of an output neuron can be adjusted to increase or decrease the probability the model predicts. |
| Activation function | A mathematical function, usually nonlinear, that converts the neuron's weighted sum into its output. | Sigmoid and Tanh limit a neuron's output to a fixed range; ReLU sets negative values to zero. |
| Layer | A set of neurons that all take the previous layer’s outputs as input and whose outputs together form the input to the next layer. | Input layer, hidden layer, output layer. |
| Dense layer (fully-connected layer) | A type of layer where each neuron is connected to all neurons in the previous layer. | Commonly used in many Neural Network applications. |
| Convolutional layer | A type of layer that uses filters to extract local features within images. | Used in image processing tasks such as object recognition and image classification. |
| Recurrent layer | A type of layer that can store information from previous calculations, helping it process sequential data. | Used in natural language processing tasks such as machine translation and text generation. |
| Forward propagation | The calculation process in a Neural Network from the input layer to the output layer, using the current weights and biases. | The model receives input, calculates the output of each layer, and passes it on as the input of the next layer. |
| Backpropagation | An algorithm that computes the gradient of the loss function with respect to every weight and bias, so they can be updated to optimize the model. | Backpropagation is how the model “learns” from data: its gradients drive the updates that improve prediction performance. |
| Loss function | A function that measures the error between the model’s output and the true label, used to evaluate the model’s performance. | Mean Squared Error (MSE), Cross-Entropy… are used to measure errors in different tasks. |
| Gradient descent | An optimization algorithm that helps find the optimal values of weights and biases in a Neural Network model, aiming to minimize the value of the loss function. | The Gradient Descent algorithm is one of the most common algorithms used to train Neural Network models. |
| Overfitting | The phenomenon where the model fits the training dataset too closely (including its noise), leading to poor performance on the test dataset. | Overfitting can occur when the model is too complex compared to the training data. |
| Regularization | Techniques to reduce overfitting by adding constraints or penalties to the model’s weights. | L1 regularization, L2 regularization… are common techniques to control the complexity of the model. |
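The sketch below ties together forward propagation, the loss function, backpropagation, and gradient descent. It trains a single sigmoid neuron (essentially logistic regression) with cross-entropy loss on a tiny invented dataset. The data, learning rate, and step count are hypothetical choices. For one neuron, the backpropagated gradient reduces to the simple expression `p - y`, so this does not show backpropagation through several layers.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cross_entropy(p, y):
    # Binary cross-entropy; eps guards against log(0).
    eps = 1e-12
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

# Hypothetical toy data: two features per example, label 1 for the "high" cluster.
X = np.array([[0.0, 0.1], [0.1, 0.0], [0.2, 0.2],
              [0.8, 0.9], [0.9, 0.8], [1.0, 1.0]])
y = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])

w = np.zeros(2)   # weights
b = 0.0           # bias
lr = 1.0          # learning rate (hypothetical choice)
losses = []

for step in range(2000):
    # Forward propagation: predicted probabilities with the current parameters.
    p = sigmoid(X @ w + b)
    losses.append(cross_entropy(p, y))

    # Backpropagation (chain rule): for sigmoid + cross-entropy,
    # dLoss/dz = (p - y) / n for each example's weighted sum z.
    dz = (p - y) / len(y)
    grad_w = X.T @ dz
    grad_b = dz.sum()

    # Gradient descent: move each parameter against its gradient.
    w -= lr * grad_w
    b -= lr * grad_b

predictions = (sigmoid(X @ w + b) > 0.5).astype(float)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.4f}")  # starts at ln 2, about 0.693
print("predictions:", predictions, "labels:", y)
```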

Comparison of Neural Network training methods

| Method | Description | Advantages | Disadvantages |
| --- | --- | --- | --- |
| Gradient Descent | A basic optimization algorithm that calculates the gradient of the loss function over the entire dataset and updates weights and biases in the direction that reduces the loss. | Simple, easy to understand and implement. | Can get stuck in local optima; each update is slow on large datasets. |
| Stochastic Gradient Descent (SGD) | Instead of computing the gradient on the entire dataset, SGD updates weights and biases using a single randomly chosen example (the name is also used loosely for small random samples). | Much cheaper updates; often makes faster progress than Gradient Descent on large datasets. | Noisy updates make the loss fluctuate during training; usually needs a decaying learning rate. |
| Mini-Batch Gradient Descent | Combines the advantages of Gradient Descent and SGD, using a small group of data (mini-batch) to update weights and biases. | Fast convergence; less noisy than SGD. | Requires choosing an appropriate mini-batch size. |
| Momentum | Adds a momentum term (an accumulation of past gradients) to the weight and bias updates, which speeds up convergence and helps the optimizer move through flat regions and shallow local optima. | Faster convergence, more stable than SGD. | Adds a momentum hyperparameter that needs tuning. |
| Adagrad | Adapts the learning rate for each weight and bias individually, scaling it by the accumulated sum of that parameter's past squared gradients. | Adjusts learning rates automatically; works well with sparse features. | Because the accumulated sum only grows, the effective learning rate keeps shrinking and training can stall later on. |
| RMSprop | Addresses Adagrad's ever-shrinking learning rate by scaling each step with an exponential moving average of squared gradients instead of their full sum. | Faster convergence than Adagrad, more stable. | Requires choosing an appropriate decay rate parameter. |
| Adam | Combines the ideas of Momentum and RMSprop, keeping exponential moving averages of both the gradients and the squared gradients (with bias correction). | Fast convergence, stable, works well across many types of data. | Can generalize worse than well-tuned SGD with momentum on some tasks. |

This table provides an overview of common training methods in Neural Networks, giving you a better understanding of the optimization algorithms used to minimize the loss function during training.

Choosing the appropriate optimizer depends on the characteristics of the data, the network architecture, and the training goals.
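To see how these update rules differ, the sketch below applies plain gradient descent, momentum, and Adam to a hypothetical loss. The loss is an elongated quadratic bowl, f(θ) = 0.5 (10 θ0² + θ1²), with its minimum at (0, 0). The learning rates and step counts are arbitrary illustrative choices. The momentum form `v = beta * v + grad` is one of several conventions in use. The Adam values (beta1 = 0.9, beta2 = 0.999, eps = 1e-8) are the commonly used defaults. Real training would compute gradients from mini-batches of data rather than from a fixed formula.

```python
import numpy as np

def grad(theta):
    # Gradient of the hypothetical loss f(theta) = 0.5 * (10*theta0^2 + theta1^2).
    return np.array([10.0, 1.0]) * theta

def gradient_descent(theta, lr=0.05, steps=200):
    for _ in range(steps):
        theta = theta - lr * grad(theta)
    return theta

def momentum(theta, lr=0.05, beta=0.9, steps=200):
    velocity = np.zeros_like(theta)
    for _ in range(steps):
        velocity = beta * velocity + grad(theta)  # accumulate past gradients
        theta = theta - lr * velocity
    return theta

def adam(theta, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=200):
    m = np.zeros_like(theta)  # moving average of gradients (momentum part)
    v = np.zeros_like(theta)  # moving average of squared gradients (RMSprop part)
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g**2
        m_hat = m / (1 - beta1**t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2**t)
        theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta

start = np.array([1.0, 1.0])
for name, opt in [("GD", gradient_descent), ("Momentum", momentum), ("Adam", adam)]:
    print(name, opt(start.copy()))
```

Changing `lr` or `steps` shows how sensitive each method is to its hyperparameters.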

Comparison of common Deep Learning applications

| Area | Application | Example |
| --- | --- | --- |
| Natural Language Processing (NLP) | Machine translation, text generation, sentiment analysis, question answering, text summarization. | Google Translate, ChatGPT, Sentiment analysis tools, Chatbots, Text summarizers. |
| Image Recognition | Image classification, object recognition, image segmentation, image generation, image search. | Google Photos, Face Recognition systems, Self-driving cars, Image generators, Image search engines. |
| Music | Music generation, music classification, music recommendation, music analysis. | AI music composers, Music classification software, Music recommendation services, Music analysis tools. |
| Healthcare | Disease diagnosis, early disease detection, personalized treatment, medical image analysis. | Medical imaging analysis tools, Disease prediction models, Personalized medicine applications. |
| Finance | Fraud detection, stock market prediction, risk management, investment recommendation. | Fraud detection systems, Stock market prediction models, Risk management tools, Investment recommendation systems. |
| Cybersecurity | Network attack detection, malware classification, network behavior analysis. | Intrusion detection systems, Malware analysis tools, Network traffic analysis tools. |
| Education | Learning support, personalized education, student assessment. | Online learning platforms, Personalized learning systems, Automated grading systems. |
| Manufacturing | Automation, quality control, fault prediction. | Automated production lines, Quality control systems, Predictive maintenance systems. |
| Retail | Product recommendation, personalized advertising, customer behavior analysis. | E-commerce recommendation engines, Personalized advertising platforms, Customer behavior analysis tools. |
| Transportation | Self-driving cars, traffic control systems, traffic prediction. | Self-driving cars, Traffic control systems, Traffic prediction models. |

This table provides an overview of the diverse applications of Deep Learning in various fields.

Deep Learning is being applied ever more widely and brings significant benefits to human life.

Example: Handwritten Digit Recognition

Let’s try solving the handwritten digit recognition problem using Neural Networks.

Input: A grayscale image (28×28 pixels) of a handwritten digit.

Output: The digit (0-9) written in the image.

The image is represented as a 2D matrix with 28 rows and 28 columns, with pixel values ranging from 0 (black) to 1 (white). Before entering the first dense layer, this matrix is flattened into a vector of 28 × 28 = 784 input values.

Model architecture:

The model has 3 dense layers, the first with 16 units, the second with 16 units, and the last with 10 units, for a total of 42 neuron units.
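The architecture also determines how many numbers gradient descent must learn. The sketch below counts them for a stack of dense layers. It assumes, consistent with the 42-unit total, that the 784 pixel values feed directly into the first dense layer. It also assumes each unit has one weight per incoming connection plus one bias. `count_parameters` is a hypothetical helper.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Weights + biases in a stack of dense layers.

    A dense layer mapping n_in values to n_out neurons has n_in * n_out
    weights (one per connection) and n_out biases (one per neuron).
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# 28 x 28 = 784 input values, then dense layers of 16, 16 and 10 units.
sizes = [28 * 28, 16, 16, 10]
print(sum(sizes[1:]))           # 42 neuron units
print(count_parameters(sizes))  # 13002 weights and biases
```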

Key points:

- The weights and biases are automatically “learned” by the model using the gradient descent algorithm.

- The model automatically finds the necessary features of the image to make accurate predictions.

The “inference” process:

- Forward propagation: The model computes the output of each unit layer by layer, from left to right; each layer takes the outputs of the previous layer as its input.

- Output layer: 10 units correspond to 10 predicted digits. The unit with the highest value (closest to 1) represents the model’s prediction.
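Below is a minimal NumPy sketch of this inference process for the 784-16-16-10 network. Two assumptions are worth flagging. First, it uses sigmoid activations in every layer, since the article does not specify which activation is used. Second, the weights are random and untrained, so the predicted digit is meaningless until gradient descent has learned them. The random image is only a stand-in for a real handwritten digit.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(42)
# Hypothetical, untrained parameters: a real model would learn these.
sizes = [784, 16, 16, 10]
weights = [rng.normal(0.0, 0.1, size=(n_out, n_in)) for n_in, n_out in zip(sizes, sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]

def predict(image: np.ndarray) -> tuple[int, np.ndarray]:
    """Forward propagation through the three dense layers, then pick the top unit."""
    a = image.reshape(-1)             # flatten 28x28 -> 784 input values
    for W, b in zip(weights, biases):
        a = sigmoid(W @ a + b)        # each layer uses the previous layer's output
    return int(np.argmax(a)), a       # index of the largest output = predicted digit

image = rng.random((28, 28))          # stand-in image, pixel values in [0, 1)
digit, outputs = predict(image)
print(digit, outputs.round(3))
```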

A simulation of this prediction process is available on the 3Blue1Brown website.

Conclusion

Neural Networks, the cradle of Deep Learning, are a promising and rapidly developing field. They have revolutionized many industries, from natural language processing and image recognition to healthcare, finance, and many other areas.

However, Neural Networks are also a complex field, requiring in-depth knowledge of mathematics, statistics, and computer science. Understanding Neural Networks, training algorithms, network architectures, and real-world applications is crucial for realizing their full potential.

The development of Neural Networks and Deep Learning is opening up new opportunities for humans, while also posing challenges that need to be addressed in the future.

Final Thoughts

Neural Networks are a complex and promising field. This article is just a brief introduction to the concept and history of Neural Networks. To understand this field more deeply, explore training algorithms, common network architectures, and real-world applications of Deep Learning.

The “self-learning” capability is what makes Neural Networks powerful, making them the foundation of Deep Learning and widely applied in many areas.

Do you want to learn more about how Neural Networks “learn”? Keep an eye on Click Digital’s future articles to explore more about Deep Learning!